React is a JavaScript library for building user interfaces. It is popular and used by many organisations for front-end development.

Why React is Famous

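React is popular for many reasons, including the virtual DOM, its lightweight core, modular (component-based) development and a large ecosystem that covers needs such as routing through companion libraries like React Router. Today we will look at the virtual DOM, one of the features that makes React stand out.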

Virtual Document Object Model (DOM)

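The Document Object Model (DOM) is the tree structure the browser builds from an HTML document, which scripts can read and modify. Every node in the tree represents a part of the document, such as an element or a piece of text.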
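As a rough illustration, the tree for a small snippet can be modelled with plain JavaScript objects. The object shape (`nodeType`, `tagName`, `attributes`, `children`) is a simplification assumed for this sketch; real DOM nodes are browser objects with many more properties. Walking the tree shows that it contains both element nodes and text nodes.

```javascript
// Simplified, hand-written model of the tree for:
//   <div class="demo">Hello <p>Today</p></div>
// The object shape is an assumption for illustration, not the real DOM API.
const tree = {
  nodeType: "element",
  tagName: "div",
  attributes: { class: "demo" },
  children: [
    { nodeType: "text", value: "Hello " },
    {
      nodeType: "element",
      tagName: "p",
      attributes: {},
      children: [{ nodeType: "text", value: "Today" }],
    },
  ],
};

// Walk the tree depth-first and count element and text nodes.
function countNodes(node, counts = { element: 0, text: 0 }) {
  counts[node.nodeType] += 1;
  for (const child of node.children ?? []) {
    countNodes(child, counts);
  }
  return counts;
}

console.log(countNodes(tree)); // 2 element nodes (div, p) and 2 text nodes
```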

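When a user action triggers an event, the event handler updates the DOM. To display the correct page after that change, the browser has to recalculate styles and layout (often called reflow) and repaint the affected parts of the screen. These operations are expensive, and when the DOM is updated frequently or in large chunks, the application's performance suffers.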

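The virtual DOM is a lightweight, in-memory copy of the DOM made of plain JavaScript objects. When something changes, React first builds an updated virtual DOM, compares it with the previous version, and then updates only the parts of the real DOM that actually need to change.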
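To make the comparison step concrete, here is a minimal sketch of a virtual DOM diff in plain JavaScript. It is not React's implementation: the helper names `h` and `diff`, the vnode shape `{ type, props, children }` and the patch format are assumptions for illustration. Children are compared by position only, whereas React also uses `key` props to match children that move.

```javascript
// Create a virtual node (vnode): a plain object describing an element.
// Strings are used directly as text nodes. `h` is a hypothetical helper name.
function h(type, props, ...children) {
  return { type, props: props ?? {}, children };
}

// Compare an old and a new vnode tree and collect the patches needed to turn
// the old tree into the new one. `path` lists child indexes from the root.
// Children are matched by position only (no keys).
function diff(oldNode, newNode, path = [], patches = []) {
  if (oldNode === undefined) {
    patches.push({ op: "create", path, node: newNode });
  } else if (newNode === undefined) {
    patches.push({ op: "remove", path });
  } else if (typeof oldNode === "string" && typeof newNode === "string") {
    // Both are text nodes: patch only if the text changed.
    if (oldNode !== newNode) patches.push({ op: "setText", path, text: newNode });
  } else if (
    typeof oldNode === "string" ||
    typeof newNode === "string" ||
    oldNode.type !== newNode.type
  ) {
    // Different kinds of node: replace the whole subtree.
    patches.push({ op: "replace", path, node: newNode });
  } else {
    // Same element type: keep the node, then compare props and children.
    const keys = new Set([...Object.keys(oldNode.props), ...Object.keys(newNode.props)]);
    for (const key of keys) {
      if (oldNode.props[key] !== newNode.props[key]) {
        patches.push({ op: "setProp", path, key, value: newNode.props[key] });
      }
    }
    const count = Math.max(oldNode.children.length, newNode.children.length);
    for (let i = 0; i < count; i++) {
      diff(oldNode.children[i], newNode.children[i], [...path, i], patches);
    }
  }
  return patches;
}

// The demo markup at two consecutive ticks of the clock.
const before = h("div", { className: "demo" }, "Hello React", h("input", null), h("p", null, "10:00:00"));
const after = h("div", { className: "demo" }, "Hello React", h("input", null), h("p", null, "10:00:01"));

console.log(diff(before, after));
// A single patch: setText at path [2, 0] with text "10:00:01"
```

Only the date text differs between the two trees, so the diff reports one small change instead of a whole new tree.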

As the diagram below shows, when an action is triggered in the app, the virtual DOM is updated first.

In the second step, React performs reconciliation: it compares the new virtual DOM with the previous one to find the differences, then applies only those changes to the real DOM. Instead of updating the entire DOM directly, only the changed part is updated.
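The second step can be simulated without a browser. This sketch assumes a hypothetical mock DOM made of plain objects and a `writes` counter as a rough stand-in for the cost of touching the DOM (`createNode`, `applyPatches` and `tick` are illustrative names). It compares rebuilding every node with applying only the single text change that a diff would report for the clock demo. Real browser costs also depend on layout and painting, not just on a node count.

```javascript
// Mock "real DOM" built from plain objects. `writes` counts every node we
// create or modify, standing in for the cost of touching the browser DOM.
let writes = 0;

// Build mock DOM nodes from a virtual tree (strings are text nodes).
function createNode(vnode) {
  writes += 1;
  if (typeof vnode === "string") return { text: vnode };
  return { tag: vnode.type, children: vnode.children.map(createNode) };
}

// Apply patches produced by a diff step. This sketch handles only the
// "setText" operation; a full renderer would also create, remove and
// replace nodes and update attributes.
function applyPatches(root, patches) {
  for (const patch of patches) {
    // Follow the child indexes in `path` from the root to the target node.
    const node = patch.path.reduce((current, index) => current.children[index], root);
    if (patch.op === "setText") {
      node.text = patch.text;
      writes += 1;
    }
  }
}

// Virtual tree for the demo markup at a given time.
const tick = (time) => ({
  type: "div",
  children: ["Hello React", { type: "input", children: [] }, { type: "p", children: [time] }],
});

const dom = createNode(tick("10:00:00")); // initial render: 5 writes

// Strategy 1: rebuild every node, as assigning innerHTML does.
writes = 0;
createNode(tick("10:00:01"));
console.log("full re-render writes:", writes); // 5

// Strategy 2: apply only the delta a diff would report for this change.
writes = 0;
applyPatches(dom, [{ op: "setText", path: [2, 0], text: "10:00:01" }]);
console.log("patched writes:", writes); // 1
```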

Benefits

- Performance: DOM updates are kept small and infrequent.

- Consistent programming interface across browsers.

- Only the delta (the changed part) is written to the DOM, not the full tree.

Verifying that only the updated part is re-rendered

The code below is the JavaScript file from the project. The entire project setup can be found here on GitHub.

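```javascript
console.log("---loading script js ----");

// Two containers from the page's HTML: one filled by plain JS, one by React.
const jsContainer = document.getElementById("jscontainer");
const reactContainer = document.getElementById("reactContainer");

const render = () => {
  // Plain JavaScript: the markup is a string, and assigning innerHTML
  // replaces every node inside the container on each call.
  jsContainer.innerHTML = `
    <div class="demo">
      Hello Javascript
      <input />
      <p>${new Date()}</p>
    </div>
  `;

  // Note the difference: in native JS we use strings, in React we use objects.
  // ReactDOM.render is the legacy root API (React 17 and earlier). It is
  // deprecated in React 18 and removed in React 19, where
  // ReactDOM.createRoot(reactContainer).render(...) is used instead.
  ReactDOM.render(
    React.createElement(
      "div",
      { className: "demo" },
      "Hello React",
      React.createElement("input"),
      React.createElement("p", null, new Date().toString())
    ),
    reactContainer
  );
};

// Re-render both containers every second.
setInterval(render, 1000);

console.log("completed");
```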

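As you can see, the script renders the same content (a greeting, a text input and the current date) into two containers and forces a re-render every second with `setInterval`. One container uses plain HTML and the other uses React. In plain JavaScript the markup is a string assigned to `innerHTML`; in React it is described with objects created by `React.createElement`. These objects are what React compares during reconciliation, which lets it update only the parts of the DOM that changed. You can check this in the browser: the plain-JavaScript input is recreated every second, so anything typed into it is lost, while the React input keeps its text because React only updates the date inside the `<p>` element.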
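The text input in the demo makes one side effect of the two strategies visible. The sketch below simulates it with hypothetical plain-object nodes (`renderFresh` and `renderReconciled` are illustrative names, not browser or React APIs, and `value` stands in for what the user typed): recreating the nodes throws away the typed text, while reusing the nodes and updating only the date keeps it.

```javascript
// innerHTML-style rendering: every call creates brand-new nodes,
// so the input always starts out empty.
function renderFresh(time) {
  return { tag: "div", children: [{ tag: "input", value: "" }, { tag: "p", text: time }] };
}

// Reconciliation-style rendering: reuse the existing nodes and update only
// the text that changed. The input node is left untouched.
function renderReconciled(existing, time) {
  const p = existing.children[1];
  if (p.text !== time) p.text = time;
  return existing;
}

let plain = renderFresh("10:00:00");
let reactLike = renderFresh("10:00:00");

// The user types into both inputs.
plain.children[0].value = "hello";
reactLike.children[0].value = "hello";

// One second later, both containers re-render.
plain = renderFresh("10:00:01");
reactLike = renderReconciled(reactLike, "10:00:01");

console.log(JSON.stringify(plain.children[0].value)); // "" (typed text lost)
console.log(JSON.stringify(reactLike.children[0].value)); // "hello" (typed text kept)
```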

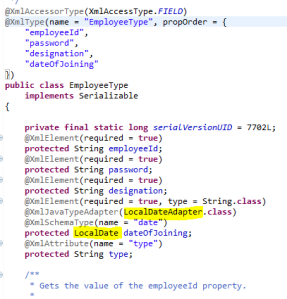

The image illustrates the behaviour of delta updates. A video is also available, but in case YouTube is blocked, the image descriptions cover the same behaviour.

Video showcase for delta updates

Conclusion

- The virtual DOM is a key differentiator of React.

- The virtual DOM keeps DOM updates efficient.

- It updates only the changed part (the delta) of the DOM.

- It helps deliver a smooth user experience in single-page applications.